End-to-end causal inference

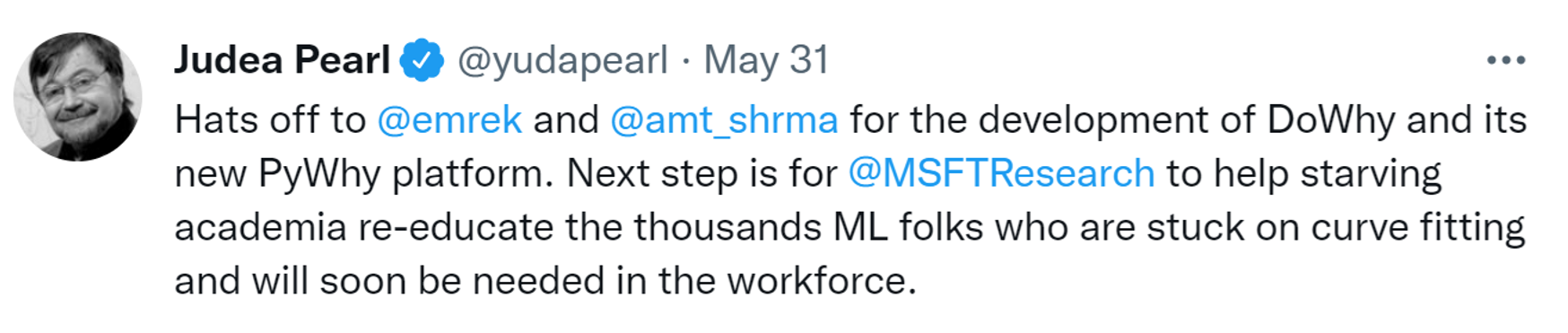

Unlike prediction, causal inference depends critically on assumptions: “no causes in, no causes out”. To help researchers formally state and verify causal assumptions, I built DoWhy (Sharma & Kiciman, 2020), an open-source Python library that conceptualizes causal inference as a four-step process: model, identify, estimate, and refute. The library has over three million downloads and is widely used across industry and academia. A key benefit is that DoWhy lets users validate their causal assumptions with state-of-the-art validation checks. I continue to work on better methods for validating causal assumptions.
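DoWhy exposes these four steps through its `CausalModel` class (`identify_effect`, `estimate_effect`, `refute_estimate`). To show what each step does without depending on the library, here is a minimal pure-Python sketch on synthetic linear data. The graph, the helper names (`identify`, `estimate`, `refute_placebo`) and the data-generating coefficients are all hypothetical. The refutation shown is a simple placebo-treatment test, one of several checks of this kind.

```python
import random

# Step 1 (model): hypothetical causal graph, node -> list of parents.
# W confounds treatment T and outcome Y; T also affects Y directly.
GRAPH = {"W": [], "T": ["W"], "Y": ["T", "W"]}


def simulate(n, effect=2.0, seed=0):
    """Draw synthetic linear-Gaussian data consistent with GRAPH (hypothetical coefficients)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        w = rng.gauss(0, 1)
        t = 1.5 * w + rng.gauss(0, 1)
        y = effect * t + 3.0 * w + rng.gauss(0, 1)
        rows.append({"W": w, "T": t, "Y": y})
    return rows


# Step 2 (identify): when every variable in the graph is observed, the
# treatment's parents block every backdoor path, so they are a valid adjustment set.
def identify(graph, treatment):
    return list(graph[treatment])


def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


# Step 3 (estimate): ordinary least squares of Y on T plus the adjustment set
# (backdoor adjustment for a linear model); the coefficient on T is the estimate.
def estimate(rows, treatment, outcome, adjust):
    cols = [treatment] + list(adjust)
    x = [[1.0] + [r[c] for c in cols] for r in rows]  # leading 1.0 = intercept
    y = [r[outcome] for r in rows]
    k = len(x[0])
    xtx = [[sum(xi[i] * xi[j] for xi in x) for j in range(k)] for i in range(k)]
    xty = [sum(xi[i] * yi for xi, yi in zip(x, y)) for i in range(k)]
    return solve(xtx, xty)[1]


# Step 4 (refute): placebo test. Shuffling T breaks its link to Y,
# so a sound analysis should now estimate an effect close to zero.
def refute_placebo(rows, treatment, outcome, adjust, seed=1):
    rng = random.Random(seed)
    placebo = [r[treatment] for r in rows]
    rng.shuffle(placebo)
    shuffled = [dict(r, **{treatment: p}) for r, p in zip(rows, placebo)]
    return estimate(shuffled, treatment, outcome, adjust)


data = simulate(5000)
adjust = identify(GRAPH, "T")
effect = estimate(data, "T", "Y", adjust)
placebo = refute_placebo(data, "T", "Y", adjust)
naive = estimate(data, "T", "Y", [])  # ignores the confounder W
print(adjust, round(effect, 2), round(placebo, 2), round(naive, 2))
```

With this setup the adjusted estimate should land near the simulated effect of 2, the placebo estimate near 0, and the unadjusted regression well above 2, because ignoring W lets confounding leak into the coefficient on T.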

However, two limitations still hinder the application of causal methods in practice: (1) reliance on the user to provide a causal graph upfront, and (2) the complexity of the assumptions, which makes it difficult to choose the right estimation method for a given dataset. In late 2022, I stumbled upon an interesting discovery: LLMs are remarkably good at inferring causal relationships from scientific literature and, in some cases, can even suggest missing relationships or confounders for a study (Kıcıman et al., 2024). Building on these results, my current research focuses on how LLMs can help address both of these challenges. See PyWhyLLM for early demos of how LLMs can accelerate building a causal graph and guide users through the different choices in a causal analysis.
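One way to picture LLM-assisted graph building is to ask a model about each pair of variables and then check that the suggested edges form a directed acyclic graph before showing them to the user. The sketch below is hypothetical and does not use PyWhyLLM's API: `ask_expert` is a stand-in that returns canned answers where a real system would prompt an LLM, and the variable names are illustrative.

```python
from collections import deque
from itertools import combinations

# Hypothetical canned answers standing in for LLM responses; a real system
# would prompt a model with the two variable names and parse its reply.
CANNED = {
    ("age", "smoking"): "a->b",
    ("age", "lung_cancer"): "a->b",
    ("smoking", "lung_cancer"): "a->b",
}


def ask_expert(a, b):
    """Return 'a->b', 'b->a', or 'none' for the pair (a, b)."""
    return CANNED.get((a, b), "none")


def build_graph(variables, ask=ask_expert):
    """Query every unordered pair of variables once and collect directed edges."""
    edges = set()
    for a, b in combinations(variables, 2):
        answer = ask(a, b)
        if answer == "a->b":
            edges.add((a, b))
        elif answer == "b->a":
            edges.add((b, a))
    return edges


def topological_order(variables, edges):
    """Kahn's algorithm: return a causal order, or None if the edges contain a cycle."""
    indegree = {v: 0 for v in variables}
    children = {v: [] for v in variables}
    for a, b in edges:
        children[a].append(b)
        indegree[b] += 1
    queue = deque(v for v in variables if indegree[v] == 0)
    order = []
    while queue:
        v = queue.popleft()
        order.append(v)
        for c in children[v]:
            indegree[c] -= 1
            if indegree[c] == 0:
                queue.append(c)
    return order if len(order) == len(variables) else None


variables = ["age", "smoking", "lung_cancer"]
edges = build_graph(variables)
print(sorted(edges), topological_order(variables, edges))
```

If the answers are mutually inconsistent (for example, x->y, y->z and z->x), `topological_order` returns `None`, which is a natural point to hand the conflict back to the user instead of silently picking a graph.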

In the future, I hope that we can democratize causal analysis and increase its adoption across science and business: users could specify a causal query in natural language, and the system would work with them to answer it. To enable this vision, I also work on fundamental improvements to causal effect estimation and root-cause analysis methods.

Scalable causal effect estimation

- Estimating the causal effect of online recommendation systems on our behavior (Sharma et al., 2018; Sharma & Cosley, 2016; Sharma et al., 2015)

- Estimating the causal effect of a novel intervention using proxies (Xu et al., 2021)

Scalable root-cause analysis

- Scalable methods for root cause diagnosis (Nagalapatti et al., 2025; Sharma et al., 2022)

Democratizing causal analysis

- Algorithms that can infer causal relationships using both existing knowledge and data (Vashishtha et al., 2025)
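One reason a simple ordering of variables can be enough to combine expert knowledge with data: assuming every variable in the causal graph is observed, the variables that come before the treatment in a correct causal order include all of its parents and none of its descendants, so they satisfy the backdoor criterion. The hypothetical helper below illustrates that fact; it is not the algorithm from Vashishtha et al. (2025), and the variable order is made up for the example.

```python
def adjustment_set_from_order(order, treatment, outcome):
    """Variables that precede `treatment` in a causal (topological) order.

    Assumes all variables are observed and the order is consistent with the
    true DAG. Every parent of the treatment then appears before it and every
    descendant after it, so the returned set satisfies the backdoor criterion.
    """
    t, y = order.index(treatment), order.index(outcome)
    if y < t:
        # The outcome precedes the treatment, so it cannot be one of its effects.
        raise ValueError(f"{outcome!r} precedes {treatment!r} in the order")
    return order[:t]


# Hypothetical order, e.g. elicited from a domain expert or an LLM.
order = ["age", "income", "smoking", "exercise", "heart_disease"]
print(adjustment_set_from_order(order, "smoking", "heart_disease"))
```

The resulting set can be passed to any backdoor estimator, such as a regression that adjusts for it. Including extra pre-treatment variables keeps the adjustment valid under these assumptions, though it may increase the variance of the estimate.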

References

- Arxiv

- Causal reasoning and large language models: Opening a new frontier for causality. Transactions on Machine Learning Research, Aug 2024.

- Split-door criterion: Identification of causal effects through auxiliary outcomes. The Annals of Applied Statistics, Dec 2018.

- Distinguishing between personal preferences and social influence in online activity feeds. In Proceedings of the 19th ACM Conference on Computer-Supported Cooperative Work & Social Computing, Feb 2016.

- Estimating the Causal Impact of Recommendation Systems from Observational Data. In Proceedings of the Sixteenth ACM Conference on Economics and Computation, Jun 2015.

- Split-Treatment Analysis to Rank Heterogeneous Causal Effects for Prospective Interventions. In Proceedings of the 14th ACM International Conference on Web Search and Data Mining, Mar 2021.

- Robust Root Cause Diagnosis using In-Distribution Interventions. In The Thirteenth International Conference on Learning Representations (ICLR), Mar 2025.

- Causal Order: The Key to Leveraging Imperfect Experts in Causal Inference. In The Thirteenth International Conference on Learning Representations (ICLR), Mar 2025.