Teaching causal reasoning to language models

Abstract

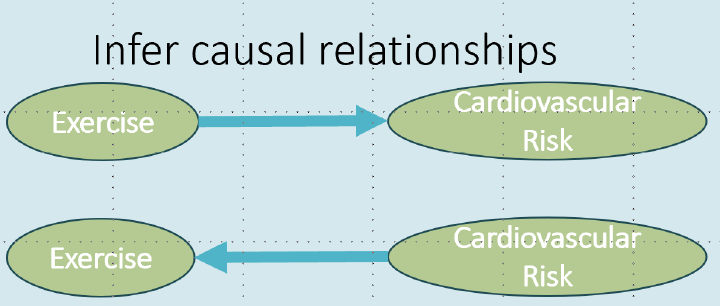

Large language models (LLMs) have demonstrated remarkable accuracy in identifying cause-and-effect relationships across diverse scientific domains. However, reasoning over these relationships remains a challenge for them. To address this, we propose axiomatic training, a novel approach that enhances causal reasoning by teaching LLMs fundamental causal axioms one at a time, rather than fine-tuning them for specific tasks. By training on synthetic demonstrations of axioms such as transitivity and d-separation, we show that models with fewer than 100 million parameters can surpass the reasoning capabilities of significantly larger models such as Phi-3, Gemini Pro and GPT-4. Axiomatic training has practical applications as a tool for constructing verifiers for LLM-generated reasoning and for embedding inductive biases into LLM fine-tuning. Moreover, it provides insights into how models like GPT-4, trained solely on observational data, can exhibit advanced reasoning capabilities.
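To make the idea of a synthetic demonstration concrete, the following is a minimal sketch of how a single transitivity example might be generated. The function name `make_transitivity_example`, the variable names `X0, X1, ...`, and the premise/question/label text format are all illustrative assumptions, not the format used in this work.

```python
import random


def make_transitivity_example(num_nodes=4, rng=None):
    """Build one synthetic demonstration of the transitivity axiom.

    Hypothetical format (an assumption, not taken from the paper):
    a premise listing the edges of a causal chain, a yes/no question
    about two variables, and the label implied by transitivity.
    """
    rng = rng or random.Random()

    # Shuffle the names so position in the chain cannot be read off the name.
    names = [f"X{i}" for i in range(num_nodes)]
    rng.shuffle(names)

    # Premise: a simple chain names[0] -> names[1] -> ... -> names[-1].
    premise = " ".join(f"{a} causes {b}." for a, b in zip(names, names[1:]))

    # Pick two distinct variables to ask about.
    i, j = rng.sample(range(num_nodes), 2)
    question = f"Does {names[i]} cause {names[j]}?"

    # In a chain, an earlier variable causes every later one (transitivity);
    # a later variable never causes an earlier one.
    label = "Yes" if i < j else "No"

    return {"premise": premise, "question": question, "label": label}


if __name__ == "__main__":
    print(make_transitivity_example(num_nodes=4, rng=random.Random(0)))
```

A sketch like this covers only simple chains; demonstrations of d-separation would need richer graph structures than a single path.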