Causal Inference in Recommender Systems

Abstract

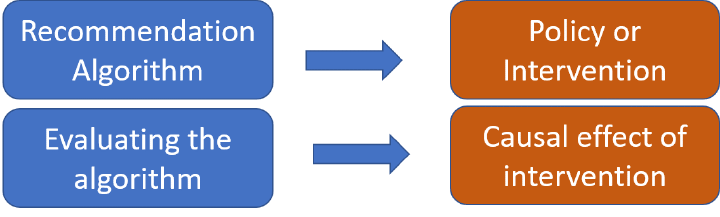

What is the impact of a recommender system? In a typical three-way interaction between users, items, and the platform, a recommender system can affect each of the three stakeholders differently, and that impact can be measured with multiple metrics based on utility, diversity, and fairness. One way to measure impact is through randomized A/B tests, but experiments are costly and can only measure short-term outcomes. This talk describes a unifying framework based on causality that can be used to answer such questions. Using the example of a recommender system's effect on increasing sales for a platform, I will discuss the four steps that form the basis of a causal analysis: modeling the causal mechanism, identifying the correct estimand, estimating it, and finally checking the robustness of the obtained estimates. Applying this process with independence assumptions common in click log data led to a new method for estimating the impact of recommendations, called the split-door causal criterion. In the latter half of the talk, I will show how the same four steps can address other questions about a recommender system, such as selection bias, missing data, and fairness.
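The four steps and the split-door idea can be illustrated with a minimal Python sketch on synthetic data. Everything in it is an assumption made for illustration, not the talk's implementation: the hypothetical causal graph (an unobserved demand shock U that raises visits X to a focal product, click-throughs Y_R to a recommended product via the recommendation, and direct visits Y_D to that recommended product), the simulated numbers, the helper names (`simulate_window`, `looks_independent`, `run_analysis`, `TRUE_RHO`), and the Fisher z-test of zero correlation used as a stand-in independence test. The sketch keeps only time windows in which X and Y_D look independent and estimates the causal click-through rate as the ratio of Y_R to X in those windows.

```python
import math
import random
import statistics

TRUE_RHO = 0.05  # hypothetical causal click-through rate (ground truth of this simulation)
DAYS = 30        # assumed number of days per time window


def simulate_window(rng, confounded):
    """Return daily (X, Y_R, Y_D) lists for one window of a synthetic product pair.

    X   = visits to the focal product
    Y_R = visits to the recommended product through the recommendation
    Y_D = direct visits to the recommended product
    In confounded windows an unobserved demand shock u raises all three.
    Counts are treated as continuous values for simplicity.
    """
    x, y_r, y_d = [], [], []
    for _ in range(DAYS):
        u = rng.uniform(0, 300) if confounded else 0.0
        xi = 1000 + u + rng.gauss(0, 50)
        x.append(xi)
        # Causal part TRUE_RHO * xi, plus shock-driven click-throughs (non-causal).
        y_r.append(TRUE_RHO * xi + 0.1 * u + rng.gauss(0, 2))
        y_d.append(300 + 0.5 * u + rng.gauss(0, 20))
    return x, y_r, y_d


def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    var_a = sum((p - ma) ** 2 for p in a)
    var_b = sum((q - mb) ** 2 for q in b)
    return cov / math.sqrt(var_a * var_b)


def looks_independent(a, b, alpha):
    """Crude stand-in test: do not reject zero correlation (Fisher z-test) at level alpha."""
    r = pearson(a, b)
    z = math.atanh(r) * math.sqrt(len(a) - 3)
    p_value = 2 * (1 - statistics.NormalDist().cdf(abs(z)))
    return p_value > alpha


def run_analysis(seed=7, n_windows=40, alpha=0.05):
    rng = random.Random(seed)
    # Step 1 (model): assumed graph U -> X, U -> Y_R, U -> Y_D, X -> Y_R;
    # every other window is hit by the unobserved shock.
    windows = [simulate_window(rng, confounded=(i % 2 == 0)) for i in range(n_windows)]
    # Naive estimate: observed click-through ratio pooled over all windows.
    naive = sum(sum(w[1]) for w in windows) / sum(sum(w[0]) for w in windows)
    # Step 2 (identify): keep only windows where X and Y_D look independent.
    kept = [w for w in windows if looks_independent(w[0], w[2], alpha)]
    # Step 3 (estimate): ratio of Y_R to X within the kept windows.
    split_door = sum(sum(w[1]) for w in kept) / sum(sum(w[0]) for w in kept)
    return naive, split_door, len(kept)


# Step 4 (robustness): the estimate should barely move as the test gets stricter or looser.
for alpha in (0.01, 0.05, 0.2):
    naive, est, kept = run_analysis(alpha=alpha)
    print(f"alpha={alpha}: naive={naive:.4f} split-door={est:.4f} windows kept={kept}")
```

Under these assumptions, the naive ratio pooled over all windows overstates the effect, because shock-driven click-throughs are counted as if the recommendation caused them, while the ratio over the retained windows stays close to `TRUE_RHO`. A real analysis would need a proper independence test for count data and the full set of assumptions that justify the criterion.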